Changing the concept URI of an existing Wikibase with data

Many users of Wikibase find themselves needing to change the concept URI of an existing Wikibase, for reasons such as a domain name change or a desire to use HTTPS concept URIs instead of HTTP ones.

Below I walk through a minimal example of how this can be done, using a small amount of data and the Wikibase Docker images. If you are not using the Docker images, the steps should still work; you just don't need to worry about copying files into and out of containers or running commands inside them.

Creating some test data

First, I need some test data that exists in both Wikibase and the query service. I'll go ahead with one property and one item, with some labels, descriptions and a statement.

```shell
# Create a string property with an English label.
# "+\" is the anonymous edit token; --data-urlencode sends it as %2B%5C,
# because a raw "+" in form data would be decoded as a space.
curl -i -X POST \
  -H "Content-Type:application/x-www-form-urlencoded" \
  -d "data={\"type\":\"property\",\"datatype\":\"string\",\"labels\":{\"en\":{\"language\":\"en\",\"value\":\"String, property label EN\"}},\"descriptions\":{},\"aliases\":{},\"claims\":{}}" \
  --data-urlencode 'token=+\' \
  'http://localhost:8181/w/api.php?action=wbeditentity&new=property&format=json'

# Create an item with a label, description and statement using the above property
curl -i -X POST \
  -H "Content-Type:application/x-www-form-urlencoded" \
  -d "data={\"type\":\"item\",\"labels\":{\"en\":{\"language\":\"en\",\"value\":\"Some item\"}},\"descriptions\":{\"en\":{\"language\":\"en\",\"value\":\"Some item description\"}},\"aliases\":{},\"claims\":{\"P1\":[{\"mainsnak\":{\"snaktype\":\"value\",\"property\":\"P1\",\"datavalue\":{\"value\":\"Statement string value\",\"type\":\"string\"},\"datatype\":\"string\"},\"type\":\"statement\",\"references\":[]}]},\"sitelinks\":{}}" \
  --data-urlencode 'token=+\' \
  'http://localhost:8181/w/api.php?action=wbeditentity&new=item&format=json'
```

Once the updater has run (by default it sleeps for 10 seconds before checking for changes), the triples can be seen in Blazegraph using the following SPARQL query.

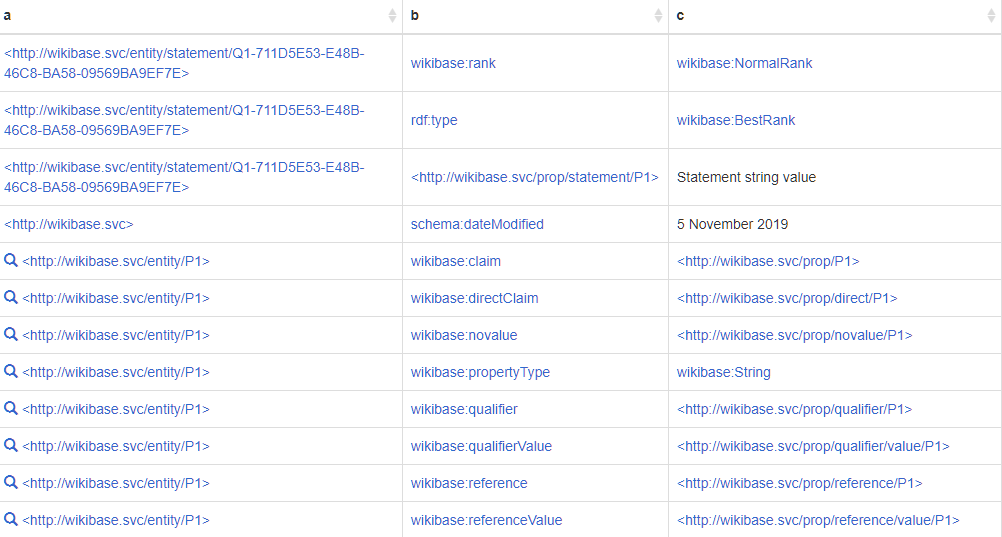

```sparql
SELECT * WHERE {?a ?b ?c}
```

The concept URI is clearly visible in the triples: it uses the default ‘wikibase.svc’ host provided by the docker-compose example for Wikibase, so entities appear as http://wikibase.svc/entity/Q1 and so on.
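On a larger Wikibase, listing every triple is not practical. A minimal sketch that counts only the triples whose subject starts with a given concept base URI; the endpoint URL is an assumption (port 8989 is the one published by the wdqs-proxy service in this post, the /bigdata/namespace/wdq/sparql path is assumed), and both function names are hypothetical helpers rather than part of any Wikibase tooling.

```shell
#!/usr/bin/env bash
# ASSUMPTION: endpoint path and port; override SPARQL_ENDPOINT for your setup.
SPARQL_ENDPOINT="${SPARQL_ENDPOINT:-http://localhost:8989/bigdata/namespace/wdq/sparql}"

# Print a SPARQL query counting triples whose subject starts with the given prefix.
concept_uri_count_query() {
  local prefix="$1"
  printf 'SELECT (COUNT(*) AS ?n) WHERE { ?s ?p ?o . FILTER(STRSTARTS(STR(?s), "%s")) }\n' "$prefix"
}

# Send a query as a GET "query" parameter (standard SPARQL protocol) and print the JSON result.
run_sparql() {
  curl -s -G -H 'Accept: application/sparql-results+json' \
    --data-urlencode "query=$1" "$SPARQL_ENDPOINT"
}

# Usage (needs a running query service):
#   run_sparql "$(concept_uri_count_query 'http://wikibase.svc/entity/')"
```

Running the same count for the old and the new base URI gives a quick comparison between query services without pulling back every triple.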

Running a new query service

You could choose to load the triples with the new concept URI into the same query service and namespace. However, to simplify things (specifically the cleanup of old triples), starting from a clean and empty query service is a good choice.

In my docker-compose file, I specify a new wdqs service with a new name and an altered set of environment variables, with the WIKIBASE_HOST environment variable changed to the new host.

```yaml
wdqs-new:
  image: wikibase/wdqs:0.3.2
  volumes:
    - query-service-data-new:/wdqs/data
  command: /runBlazegraph.sh
  networks:
    default:
      aliases:
        - wdqs-new.svc
  environment:
    - WIKIBASE_HOST=somFancyNewLocation.foo
    - WDQS_HOST=wdqs-new.svc
    - WDQS_PORT=9999
  expose:
    - 9999
```

This query service uses a new Docker volume that I also need to define in my docker-compose file.

```yaml
volumes:
  # ... other volumes here ...
  query-service-data-new:
```

As this host is fake, and to keep the updater's requests within the local Docker network, I also need to add a new network alias to the existing wikibase service. After doing so, the networks section of my wikibase service looks like this:

```yaml
networks:
  default:
    aliases:
      - wikibase.svc
      - somFancyNewLocation.foo
```

To apply the changes, I'll recreate the wikibase service and start the new query service using the following commands.

```
$ docker-compose up -d --force-recreate --no-deps wikibase
Recreating wdqsconceptblog2019_wikibase_1 ... done
$ docker-compose up -d --force-recreate --no-deps wdqs-new
Creating wdqsconceptblog2019_wdqs-new_1 ... done
```

Now two Blazegraph query services are running, both controlled by docker-compose.

The published endpoint, via the wdqs-proxy, is still pointing at the old wdqs service, as is the updater that is currently running.

Dumping RDF from Wikibase

The dumpRdf.php maintenance script in Wikibase repo dumps all items and properties as RDF for use in external services, such as the query service.

The default concept URI for Wikibase is determined from the wgServer MediaWiki global setting [code]. Before MediaWiki 1.34, wgServer was auto-detected [docs] in PHP.

Since there is no incoming web request to detect it from, when running a maintenance script wgServer is unknown and defaults to the hostname the wikibase container can see, for example “b3a2e9156cc1”.

To avoid dumping data with this garbage concept URI, one of the following must be done:

- The Wikibase repo conceptBaseUri setting must be set (to the new concept base URI, for example http://somFancyNewLocation.foo/entity/)

- The MediaWiki wgServer setting must be set (to the new server base, for example http://somFancyNewLocation.foo)

- The `--server <newConceptUriServerBase>` option must be passed to the dumpRdf.php script
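The difference between the first option and the other two is that conceptBaseUri is a full base URI, while wgServer and --server only supply the server part. The sketch below mirrors, as I understand it, how Wikibase derives its default concept base URI from a server value: a protocol-relative //host gets http: prepended, then /entity/ is appended. It is a shell illustration with a hypothetical function name, not Wikibase's own code.

```shell
#!/usr/bin/env bash
# Derive a concept base URI from a server value (sketch of the default rule).
concept_base_uri() {
  local server="$1"
  case "$server" in
    # Protocol-relative server such as //example.org: assume http.
    //*) server="http:${server}" ;;
  esac
  printf '%s/entity/\n' "$server"
}

# A server detected as the container hostname gives a useless base, e.g.
#   concept_base_uri "http://b3a2e9156cc1"            -> http://b3a2e9156cc1/entity/
# while the value passed via --server gives the intended one:
#   concept_base_uri "http://somFancyNewLocation.foo" -> http://somFancyNewLocation.foo/entity/
```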

So, to generate a new RDF dump with the new concept URI and store the RDF in a file, run the following command.

```
$ docker-compose exec wikibase php ./extensions/Wikibase/repo/maintenance/dumpRdf.php --server http://somFancyNewLocation.foo --output /tmp/rdfOutput
Dumping entities of type item, property
Dumping shard 0/1
Processed 2 entities.
```

The generated file can then be copied from the wikibase container to the local filesystem with docker cp, using the name of the wikibase container in your setup, which you can find using docker ps.
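Before copying the dump anywhere, it can be worth checking which entity base URIs it actually contains, so that a dump made with the container hostname is caught early. A minimal sketch using grep; entity_bases is a hypothetical helper name, and the pattern assumes entity URIs of the form http(s)://host/entity/.

```shell
#!/usr/bin/env bash
# List the distinct "http(s)://host/entity/" bases found in an RDF dump.
# Reads the file given as $1, or stdin when no argument is given.
entity_bases() {
  grep -oE 'https?://[^/<> "]+/entity/' ${1:+"$1"} | sort -u
}

# Usage:
#   entity_bases ./rdfOutput
#   docker-compose exec -T wikibase cat /tmp/rdfOutput | entity_bases
```

For the dump in this post, the output should contain only http://somFancyNewLocation.foo/entity/; a container hostname such as b3a2e9156cc1 in the list means the server was not set.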

```shell
docker cp wdqsconceptblog2019_wikibase_1:/tmp/rdfOutput ./rdfOutput
```

Munging the dump

To munge the dump, I'll first copy it into the new wdqs container with the following command.

```shell
docker cp ./rdfOutput wdqsconceptblog2019_wdqs-new_1:/tmp/rdfOutput
```

And then run the munge script over the dump, specifying the concept URI.

```
$ docker-compose exec wdqs-new ./munge.sh -f /tmp/rdfOutput -d /tmp/mungeOut -- --conceptUri http://somFancyNewLocation.foo
#logback.classic pattern: %d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n
16:21:31.082 [main] INFO org.wikidata.query.rdf.tool.Munge - Switching to /tmp/mungeOut/wikidump-000000001.ttl.gz
```

The munge step batches the data into a set of chunks based on a configured size, and also alters some of the triples along the way. The changes are documented here. If you have more data, you may end up with more chunks.
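The chunk files are numbered sequentially, as the log line above shows (wikidump-000000001.ttl.gz). A minimal sketch, assuming that nine-digit naming pattern, which lists the chunks in order and stops at the first missing number; that is how the "not found, terminating" message from the load step suggests loadData.sh walks them, but this is only an illustration, not the script's code, and list_chunks is a hypothetical name.

```shell
#!/usr/bin/env bash
# List munged chunk files in numeric order, stopping at the first gap.
list_chunks() {
  local dir="$1" i=1 file
  while :; do
    file="$(printf 'wikidump-%09d.ttl.gz' "$i")"
    [ -f "$dir/$file" ] || break
    printf '%s\n' "$file"
    i=$((i + 1))
  done
}

# Usage (on a local copy of the munge output directory):
#   list_chunks ./mungeOut | wc -l
```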

Loading the new query service

Using the munged data and the loadData.sh script, the data can now be loaded directly into the query service.

```
$ docker-compose exec wdqs-new ./loadData.sh -n wdq -d /tmp/mungeOut
Processing wikidump-000000001.ttl.gz
<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd"><html><head><meta http-equiv="Content-Type" content="text/html;charset=UTF-8"><title>blazegraph™ by SYSTAP</title
></head
><body<p>totalElapsed=193ms, elapsed=75ms, connFlush=0ms, batchResolve=0, whereClause=0ms, deleteClause=0ms, insertClause=0ms</p
><hr><p>COMMIT: totalElapsed=323ms, commitTime=1572971213838, mutationCount=43</p
></html
>File wikidump-000000002.ttl.gz not found, terminating
```

A second updater

Currently, I have two query services running: the old one, which is public and still being updated by an updater, and the new one, which is freshly loaded but slowly becoming out of date.

To create a second updater that runs alongside the old one, I define the following new service in my docker-compose file, pointing at the new wikibase hostname and the new query service backend.

```yaml
wdqs-updater-new:
  image: wikibase/wdqs:0.3.2
  command: /runUpdate.sh
  depends_on:
    - wdqs-new
    - wikibase
  networks:
    default:
      aliases:
        - wdqs-updater-new.svc
  environment:
    - WIKIBASE_HOST=somFancyNewLocation.foo
    - WDQS_HOST=wdqs-new.svc
    - WDQS_PORT=9999
```

I start it with a command I have already used a few times in this blog post.

```
$ docker-compose up -d --force-recreate --no-deps wdqs-updater-new
Creating wdqsconceptblog2019_wdqs-updater-new_1 ... done
```

I can confirm that the new services are up using docker-compose ps, and check that the new updater is working by looking at its container logs (docker-compose logs wdqs-updater-new).

```
docker-compose ps | grep wdqs-new
wdqsconceptblog2019_wdqs-new_1 /entrypoint.sh /runBlazegr ... Up 9999/tcp
```

Using the new query service

You might want to check your new query service before switching live traffic to it, to make sure everything is OK, but I will skip that step here.

To direct traffic to the newly loaded and now updating query service, all that is needed is to recreate the wdqs proxy with the new backend host. With the wdqs-proxy Docker image, this is set using the PROXY_PASS_HOST environment variable.

```yaml
wdqs-proxy:
  image: wikibase/wdqs-proxy
  environment:
    - PROXY_PASS_HOST=wdqs-new.svc:9999
  ports:
    - "8989:80"
  depends_on:
    - wdqs-new
  networks:
    default:
      aliases:
        - wdqs-proxy.svc
```

The service can then be recreated with the same command as before.

$ docker-compose up -d --force-recreate --no-deps wdqs-proxy

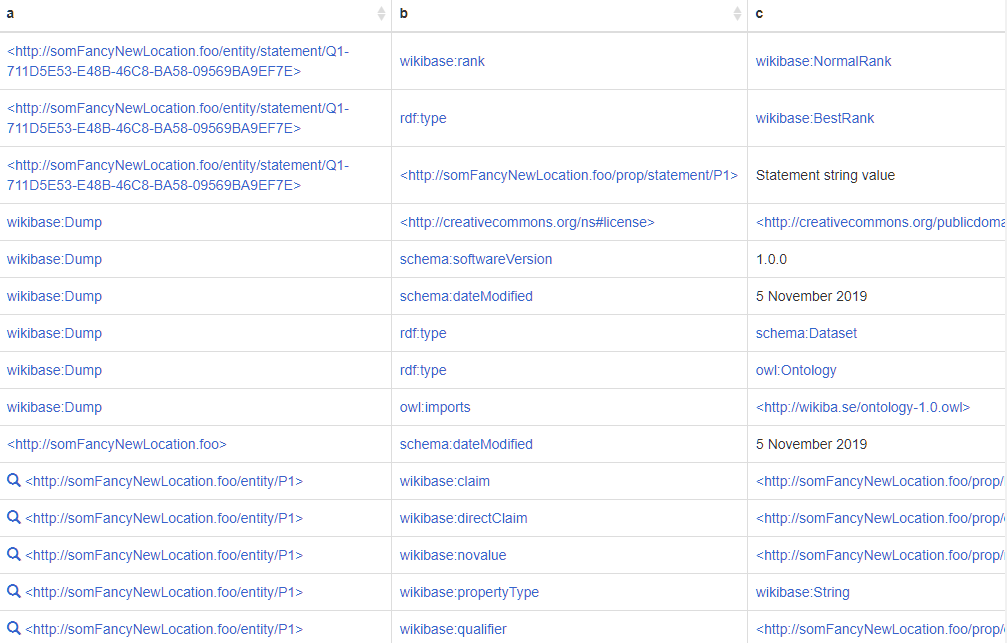

Recreating wdqsconceptblog2019_wdqs-proxy_1 ... doneRunning the same query in the UI will now return results with the new concept URIs.

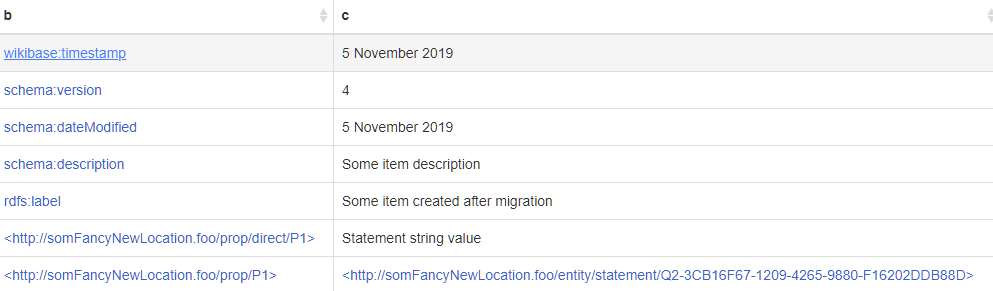

And if I create a new item (Q2), I can also see it appear in the new query service with the correct concept URI.

```shell
# "+\" is the anonymous edit token, URL-encoded by --data-urlencode.
curl -i -X POST \
  -H "Content-Type:application/x-www-form-urlencoded" \
  -d "data={\"type\":\"item\",\"labels\":{\"en\":{\"language\":\"en\",\"value\":\"Some item created after migration\"}},\"descriptions\":{\"en\":{\"language\":\"en\",\"value\":\"Some item description\"}},\"aliases\":{},\"claims\":{\"P1\":[{\"mainsnak\":{\"snaktype\":\"value\",\"property\":\"P1\",\"datavalue\":{\"value\":\"Statement string value\",\"type\":\"string\"},\"datatype\":\"string\"},\"type\":\"statement\",\"references\":[]}]},\"sitelinks\":{}}" \
  --data-urlencode 'token=+\' \
  'http://localhost:8181/w/api.php?action=wbeditentity&new=item&format=json'
```
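The wbeditentity item calls in this post differ only in their label and description. A minimal sketch of a reusable helper, assuming the same API URL, the anonymous edit token, and that P1 is the string property created at the start; item_payload and create_item are hypothetical names, and no JSON escaping is done, so arguments must not contain double quotes or backslashes.

```shell
#!/usr/bin/env bash
# Build the wbeditentity "data" JSON: English label and description,
# plus one P1 string statement (same shape as the curl calls above).
item_payload() {
  local label="$1" description="$2" value="$3"
  printf '{"type":"item","labels":{"en":{"language":"en","value":"%s"}},' "$label"
  printf '"descriptions":{"en":{"language":"en","value":"%s"}},"aliases":{},' "$description"
  printf '"claims":{"P1":[{"mainsnak":{"snaktype":"value","property":"P1",'
  printf '"datavalue":{"value":"%s","type":"string"},"datatype":"string"},' "$value"
  printf '"type":"statement","references":[]}]},"sitelinks":{}}\n'
}

# POST a new item with the anonymous edit token; curl URL-encodes both fields.
create_item() {
  curl -s --data-urlencode "data=$(item_payload "$@")" \
    --data-urlencode 'token=+\' \
    'http://localhost:8181/w/api.php?action=wbeditentity&new=item&format=json'
}

# Usage:
#   create_item "Some item created after migration" "Some item description" "Statement string value"
```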

Cleanup

I left some things lying around that are no longer needed and should be cleaned up: Docker containers, Docker volumes and files.

First, the containers.

```
$ docker-compose stop wdqs-updater wdqs
Stopping wdqsconceptblog2019_wdqs-updater_1 ... done
Stopping wdqsconceptblog2019_wdqs_1 ... done
$ docker-compose rm wdqs-updater wdqs
Going to remove wdqsconceptblog2019_wdqs-updater_1, wdqsconceptblog2019_wdqs_1
Are you sure? [yN] y
Removing wdqsconceptblog2019_wdqs-updater_1 ... done
Removing wdqsconceptblog2019_wdqs_1 ... done
```

Then the volume. Note that this permanently removes any data stored in the volume.

```
$ docker volume ls | grep query-service-data
local wdqsconceptblog2019_query-service-data
local wdqsconceptblog2019_query-service-data-new
$ docker volume rm wdqsconceptblog2019_query-service-data
wdqsconceptblog2019_query-service-data
```

And finally the other files, which are also permanently removed.

```
$ rm rdfOutput
$ docker-compose exec wikibase rm /tmp/rdfOutput
$ docker-compose exec wdqs-new rm /tmp/rdfOutput
$ docker-compose exec wdqs-new rm -rf /tmp/mungeOut
```

I then also removed these services and volumes from the docker-compose YAML file.

Things to consider

- This process will take longer on larger wikibases.

- If not using docker, you will have to run each query service on a different port.

- This post was using wdqs 0.3.2. Future versions will likely work in the same way, but past versions may not.

I have a question regarding setting up a local staging environment: for all services based on the wikibase/wdqs container, what does the WIKIBASE_HOST environment variable define? A host that will be contacted for API calls, or a host that defines a base URL for queries and RDF?

The default ‘wikibase.svc’ is changed to ‘somFancyNewLocation.foo’ above. What does that actually do?

At the time this blog post was written (and for the wdqs version it was written for) the WIKIBASE_HOST env var refers to both the concept URI to be expected and also the URL location to fetch RDF from.

In newer versions of WDQS, there are a couple of alternative settings that can be passed into the scripts to do a variety of different things there.

Great article! I am demoing this process and wonder if it's normal that a 3 GB RDF file would take longer than 4 hours to munge. I haven't got any output since the script first started.

```
bash-4.4# ./munge.sh -c 50000 -f /data/dump.rdf -d /data/mungeOut
#logback.classic pattern: %d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n
15:55:53.930 [main] INFO org.wikidata.query.rdf.tool.Munge - Switching to /data/mungeOut/wikidump-000000001.ttl.gz
```

NVM, I found my issue. You have to make sure the process can write to the directory where you are saving the munged data.
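A small pre-flight check can catch this before a long munge run. A minimal sketch; ensure_writable_dir is a hypothetical helper, it should be run as the same user that will run munge.sh, and the usage line reuses the paths from the comment above.

```shell
#!/usr/bin/env bash
# Fail early if the munge output directory cannot be created or written to.
ensure_writable_dir() {
  local dir="$1"
  mkdir -p "$dir" 2>/dev/null || { echo "cannot create $dir" >&2; return 1; }
  [ -w "$dir" ] || { echo "no write permission for $dir" >&2; return 1; }
}

# Usage (inside the wdqs container):
#   ensure_writable_dir /data/mungeOut && ./munge.sh -c 50000 -f /data/dump.rdf -d /data/mungeOut
```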

Thank you for this!

Some comments that might be relevant for newer Wikibase installs:

I guess wdqs-new should now be defined in docker-compose.extra.yml. In general, the wdqs-related services need to be added or edited in docker-compose.extra.yml.

For the docker-compose commands that require services in docker-compose.extra.yml, it is necessary to add “docker-compose -f docker-compose.yml -f docker-compose.extra.yml …” in order to indicate the extra compose file and its dependency.

Each time “somFancyNewLocation.foo” is mentioned, another option is to use ${WIKIBASE_HOST} (or create a new temporary variable ${WIKIBASE_NEW_HOST}) and set the variable in .env to the value “somFancyNewLocation.foo” or whatever its replacement should be.

In the command “docker-compose exec wikibase php”, the argument “--output /tmp/rdfOutput” should be a file rather than a directory. (My mistake was adding a slash, “/tmp/rdfOutput/”, but it does look like a directory in the example.)

When creating wdqs-updater-new, it might be useful to copy over “restart: unless-stopped” for a more reliable service and rather use “WIKIBASE_HOST=${WIKIBASE_HOST}” defining the variable via .env.

As a last step, values for “wikibase/wdqs:0.3.2” in wdqs(-new) and wdqs-updater(-new) can be replaced by “${WDQS_IMAGE_NAME}” (or a copy) defined via .env.

Thanks for the comment and I hope this helps some of the folks that are using the current wmde provided docker-compose examples for a Wikibase installation.

The instructions that I wrote were for a more generic docker-compose setup that doesn’t necessarily line up with any of the wmde provided examples.
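For the newer docker-compose.extra.yml layout described in the earlier comment, a small sketch of two hypothetical shell helpers: one wrapping the two -f flags, and one reading a value such as WIKIBASE_NEW_HOST from .env so it can be reused in commands like dumpRdf.php --server. Exporting COMPOSE_FILE=docker-compose.yml:docker-compose.extra.yml is an alternative to the wrapper. The .env parsing assumes plain KEY=value lines with no quoting or variable expansion.

```shell
#!/usr/bin/env bash
# Run docker-compose with both compose files, so services defined in
# docker-compose.extra.yml (such as wdqs-new) can be addressed.
compose() {
  docker-compose -f docker-compose.yml -f docker-compose.extra.yml "$@"
}

# Print the value of the last KEY=value line for KEY in a .env file (default ./.env).
env_value() {
  local key="$1" file="${2:-.env}"
  sed -n "s/^${key}=//p" "$file" | tail -n 1
}

# Usage:
#   NEW_HOST="$(env_value WIKIBASE_NEW_HOST)"
#   compose exec wikibase php ./extensions/Wikibase/repo/maintenance/dumpRdf.php \
#     --server "http://${NEW_HOST}" --output /tmp/rdfOutput
```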