Twitter bot powered by GitHub Actions (WikidataMeter)

Recently two new Twitter bots appeared in my feed, fullyjabbed and fullyjabbedUK, created by iamdanw and powered entirely by GitHub Actions (code).

I have been thinking about writing a Twitter bot for some time, and decided to copy this pattern: a cron-based Twitter bot running on GitHub Actions, with an added bit of free persistence using jsonstorage.net.

This post is a quick walkthrough of my new bot, WikidataMeter: what it does and how it works. You can find the version of the code from the time of writing this blog post here, and the current version here.

1) The idea (WikidataMeter)

Wikidata is a free and open knowledge base, kind of like Wikipedia, but for structured data. I have been working on the project team for some years now. When the project reached 1 billion edits in the past year it was quite nice seeing this milestone on Twitter.

So my bot is going to tweet such milestones automatically, starting with a tweet every 1 million edits.

2) Groundwork (Libs and Infra)

I spend most of my time working in PHP currently, so I decided to set up a Composer project in a new GitHub repository. This code is going to need to talk to the Twitter and Wikidata APIs, as well as somehow persistently store information about its last tweets (to make sure I don’t tweet about the same milestone multiple times).

For API access I chose to use addwiki/mediawiki-api-base for Wikidata, which is a library I wrote and am currently pushing toward a new v3 release. atymic/twitter looked like the most used Twitter library, so that’ll probably work.

Persisting data from bot runs on GitHub Actions was slightly more challenging. I could obviously pay for some simple storage service, but that means a bill and some “complex” setup. I could also try using storage / caching within GitHub Actions, but that might not be reliably persistent.

After a little bit of web searching and looking at various offerings I came across jsonstorage.net, which advertises itself as “a simple web storage”, is seemingly free, and is accessible via a super simple REST API. This is the one! After checking with the site’s creator, it turns out it has been around for quite a while, so it is unlikely to disappear anytime soon…

You’ll also need to get set up with a Twitter developer account on a new bot account in order to actually send tweets!

Now I’m all set to actually write code.

3) Logic (The Code)

For a first version everything is going to exist within a single PHP script that can easily be executed via the CLI. Let’s call it run.php. (code at time of writing this post)

There are going to be various secrets such as API tokens, object names and usernames that the script will need access to. I’m going to store those as environment variables, and for development simplify loading them using the vlucas/phpdotenv PHP library which loads a .env file into your PHP environment.
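Since these values are read with getenv(), a missing secret would otherwise only show up later as a confusing API error. As a minimal sketch, assuming a hypothetical helper called requireEnv() (it is not part of run.php), the script could fail fast instead:

```php
<?php
// Hypothetical helper (not in the original run.php): read a required
// environment variable and fail fast with a clear message if it is missing.
function requireEnv(string $name): string
{
    $value = getenv($name);
    if ($value === false || $value === '') {
        throw new RuntimeException("Missing required environment variable: {$name}");
    }
    return $value;
}

// Usage: simulate a value that would normally come from .env or CI secrets.
// 'example-object-id' is a made-up placeholder.
putenv('JSONSTORAGE_OBJECT=example-object-id');
echo requireEnv('JSONSTORAGE_OBJECT') . PHP_EOL;
```

The same call works whether the variable came from a .env file during development or from the real environment in CI.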

    // Load environment from a file (only if it exists).
    // The "unsafe" variant also makes the values readable via getenv().
    $dotenv = Dotenv\Dotenv::createUnsafeImmutable(__DIR__);
    $dotenv->safeLoad();

Next I need some information to create a tweet with: the number of revisions currently on Wikidata.
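For orientation, the underlying call is a plain GET against the MediaWiki Action API. The sketch below uses only built-in PHP functions to show the query parameters and where the edit count sits in the response. The function names buildStatisticsUrl() and extractEditCount() are hypothetical, and the sample payload is made up and trimmed to the single field used here.

```php
<?php
// Build the raw Action API URL for the site statistics query.
function buildStatisticsUrl(string $endpoint): string
{
    return $endpoint . '?' . http_build_query([
        'action' => 'query',
        'meta'   => 'siteinfo',
        'siprop' => 'statistics',
        'format' => 'json',
    ]);
}

// Pull the edit count out of a JSON response body.
function extractEditCount(string $json): int
{
    $decoded = json_decode($json, true);
    if (!isset($decoded['query']['statistics']['edits'])) {
        throw new UnexpectedValueException('No edit count in API response');
    }
    return (int) $decoded['query']['statistics']['edits'];
}

echo buildStatisticsUrl('https://www.wikidata.org/w/api.php') . PHP_EOL;

// Made-up sample payload, not real data from Wikidata.
$sample = '{"query":{"statistics":{"edits":1500000123}}}';
echo extractEditCount($sample) . PHP_EOL;
```

Using a client library saves writing this by hand, but the request and the response shape are the same.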

    $wd = MediaWiki::newFromEndpoint( 'https://www.wikidata.org/w/api.php' )->action();
    $wdStatistics = $wd->request( ActionRequest::simpleGet( 'query', [ 'meta' => 'siteinfo', 'siprop' => 'statistics' ] ) )['query']['statistics'];
    $wdEdits = $wdStatistics['edits'];

Now I need to make sure that I haven’t tweeted about the latest milestone to avoid duplicates. For that I need some persistence.

There is currently no PHP library for jsonstorage.net, but it’s a fairly simple REST API, so I can just use the Guzzle HTTP library. I also need to go and create an account and a non-public empty JSON object within the jsonstorage.net app.

    $store = new Guzzle([ 'base_uri' =>
        'https://api.jsonstorage.net/v1/json/' .
        getenv('JSONSTORAGE_OBJECT') .
        '?apiKey=' . getenv('JSONSTORAGE_KEY')
    ]);

Then I can get the data from the store. If some of the data is not yet initialized, I can initialize it with the current data.

    $data = json_decode( $store->request('GET')->getBody(), true );
    $data['wdEdits'] = array_key_exists('wdEdits',$data) ? $data['wdEdits'] : $wdEdits;
    // Example writing data back (not done until the end of the script)
    $store->request('PUT', '', [ 'body' => json_encode( $data ), 'headers' => [ 'content-type' => 'application/json; charset=utf-8' ] ]);

Now I can actually make the decision, to tweet or not to tweet, and also update the persistent data if I do schedule the tweet.

    $toPost = [];
    if ( intdiv($wdEdits, 1000000) > intdiv($data['wdEdits'], 1000000) ) {
        $roundNumber = floor($wdEdits/1000000)*1000000;
        $formatted = number_format($roundNumber);
        $toPost[] = <<<TWEET
        Wikidata now has over ${formatted} edits!
        You can find the milestone edit here https://www.wikidata.org/w/index.php?diff=${roundNumber}
        TWEET;
        $data['wdEdits'] = $wdEdits;
    }

And then if a tweet was generated, send it!

    $tw = new Twitter(
        TwitterConf::fromLaravelConfiguration(
            require_once __DIR__ . '/vendor/atymic/twitter/config/twitter.php'
        )
    );
    foreach( $toPost as $tweetText ) {
        echo "Tweeting: ${tweetText}" . PHP_EOL;
        $tw->postTweet([
            'status' => $tweetText
        ]);
    }

Finally I can store any changed data before the next run.

    $store->request('PUT', '', [ 'body' => json_encode( $data ), 'headers' => [ 'content-type' => 'application/json; charset=utf-8' ] ]);

Result!

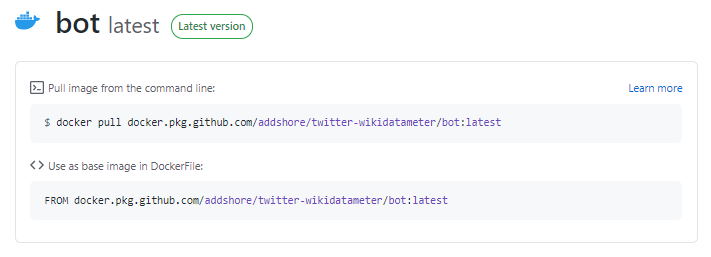
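The milestone check in the script can also be isolated as a small pure function, which makes the behaviour easy to try out without touching any API. This is only a sketch: crossedMilestone() is a hypothetical name, the edit counts are made up, and it uses intdiv() instead of floor() so the result stays an integer.

```php
<?php
// Hypothetical helper: return the highest milestone crossed between the
// previously stored edit count and the current one, or null if none.
function crossedMilestone(int $previous, int $current, int $step = 1000000): ?int
{
    if (intdiv($current, $step) > intdiv($previous, $step)) {
        return intdiv($current, $step) * $step;
    }
    return null;
}

// Made-up edit counts for illustration.
var_dump(crossedMilestone(1499999990, 1500000012)); // int(1500000000)
var_dump(crossedMilestone(1500000012, 1500400000)); // NULL
var_dump(crossedMilestone(1499999990, 1502000005)); // int(1502000000)
```

The last call shows that if several milestones pass between two runs, only the most recent one is reported, which matches the logic in run.php.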

4) Deployment (Container)

So that the GitHub Action doesn’t have to install the PHP Composer dependencies every time it runs, I decided to create a container image with the code and dependencies loaded.

This needed a tiny Dockerfile:

    FROM composer:2.0 as composer
    WORKDIR /app/
    COPY composer.* ./
    # --ignore-platform-reqs as composer image uses PHP 8
    RUN composer install --ignore-platform-reqs

    FROM php:7.4
    WORKDIR /app/
    COPY . /app
    COPY --from=composer /app/vendor /app/vendor
    ENTRYPOINT [ "php" ]
    CMD [ "run.php" ]

And a GitHub Actions workflow to build it. (Not included inline here as it is a bit long, and is just a modified example workflow.)

5) Deployment (Cron)

In order to run the bot on GitHub Actions I need to put all of the secrets needed for environment variables into the repository’s secret settings. I then make use of an action to add these secrets to a .env file, which is read by docker when running the built image.

    name: Bot Run

    on:
      workflow_dispatch:
      schedule:
        - cron: '30 * * * *' # At minute 30 (once an hour)

    jobs:
      run:
        runs-on: ubuntu-latest
        steps:
          - name: Make envfile
            uses: SpicyPizza/create-envfile@v1
            with:
              envkey_JSONSTORAGE_KEY: ${{ secrets.JSONSTORAGE_KEY }}
              envkey_JSONSTORAGE_OBJECT: ${{ secrets.JSONSTORAGE_OBJECT }}
              envkey_TWITTER_ACCESS_TOKEN: ${{ secrets.TWITTER_ACCESS_TOKEN }}
              envkey_TWITTER_ACCESS_TOKEN_SECRET: ${{ secrets.TWITTER_ACCESS_TOKEN_SECRET }}
              envkey_TWITTER_CONSUMER_KEY: ${{ secrets.TWITTER_CONSUMER_KEY }}
              envkey_TWITTER_CONSUMER_SECRET: ${{ secrets.TWITTER_CONSUMER_SECRET }}
              envkey_TWITTER_USER: ${{ secrets.TWITTER_USER }}
              file_name: .env
          - name: Log into registry
            run: echo "${{ secrets.GITHUB_TOKEN }}" | docker login docker.pkg.github.com -u ${{ github.actor }} --password-stdin
          - name: Pull the bot image
            run: docker pull docker.pkg.github.com/addshore/twitter-wikidatameter/bot:latest
          - name: Run the bot image
            run: docker run --rm --env-file .env docker.pkg.github.com/addshore/twitter-wikidatameter/bot:latest

And now the bot code should run once an hour, at minute 30!

Result

I look forward to adding more tweets and refinements to this bot in the future.

There is already a request for Mastodon support and ideas for other milestones to add.
