What happens in Wikibase when you make a new Item?

A recent post on the Wikibase mailing list about Wikibase and bulk imports prompted me to write up a mostly human-readable account of what happens, and in what order, during Wikibase action API edits, for the specific case of Item creation.

There are a fair few areas in the existing APIs and code that could be improved and optimized for a bulk import use case. Some are actively being worked on today (T285987). Some are on the roadmap, such as the new REST APIs for Wikibase. Others are out there, waiting to be considered.

This post is written looking at Wikibase and MediaWiki 1.36, with links to GitHub for code references. Some areas may be glossed over or even slightly inaccurate, so take everything here with a pinch of salt.

Reach out to me on Twitter if you have questions or fancy another deep dive.

Level 1: The API

As a Wikibase user, you request api.php in MediaWiki as part of a web request specifying action=wbeditentity. (see the wikidata.org docs)
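As a rough illustration of what such a request carries, here is a minimal Python sketch that only builds the POST body for creating an Item. The parameters `action`, `new`, `data`, `token` and `format` are from the wbeditentity documentation; the helper name `build_new_item_request` and the token value are placeholders invented for this example, and nothing is sent over the network.

```python
import json
from urllib.parse import urlencode, parse_qs


def build_new_item_request(labels, csrf_token):
    """Build a form-encoded body for action=wbeditentity creating a new Item.

    `labels` maps language codes to label text. `csrf_token` would normally
    come from a prior token request; here it is just passed through.
    """
    data = {
        "labels": {
            lang: {"language": lang, "value": value}
            for lang, value in labels.items()
        }
    }
    params = {
        "action": "wbeditentity",
        "new": "item",             # ask for a brand new Item
        "data": json.dumps(data),  # the entity JSON, sent as a string
        "token": csrf_token,
        "format": "json",
    }
    return urlencode(params)


# "EXAMPLE-TOKEN" is a made-up placeholder, not a real edit token.
body = build_new_item_request({"en": "Douglas Adams"}, "EXAMPLE-TOKEN")
print(parse_qs(body)["action"])
```

Everything described in the rest of this post happens on the server after a body like this arrives at api.php.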

api.php sets up MediaWiki, and calls the correct API module execute method, per configuration from the Wikibase extension for wbeditentity.

wbeditentity is currently the API module that handles entity creation. It is powered by the EditEntity class, which shares a common base class, ModifyEntity, and thus a common set of interactions, with most other entity-modifying action API modules.

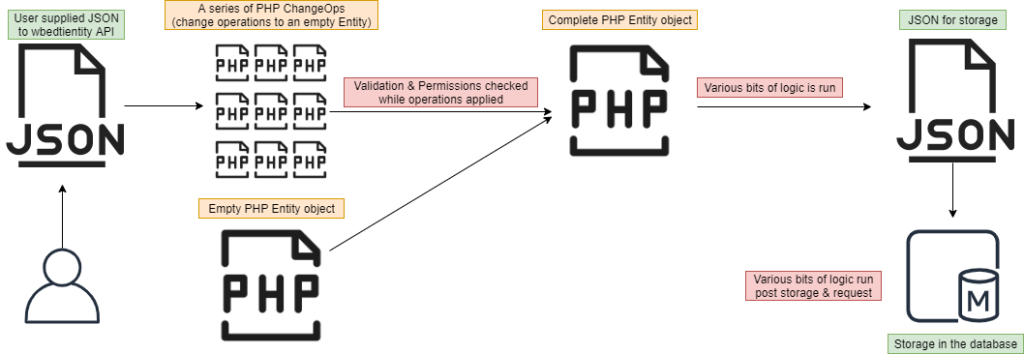

ModifyEntity::execute is thus run. This includes:

- parameter validation

- creation of an empty Item (which could end up minting a new Qid)

- transformation of the user supplied JSON into change operations for the new Item

- permission checks for the user

- applying the change operations to the new Item object

- saving of the Item (via a helper), which generates a summary, does a few other small things and ultimately calls the business layer to actually save.

- reporting back to the user.

Most of the juicy and expensive stuff happens as part of actually saving the Item, which is covered in more detail below.

A quick segue to ChangeOps

The transformation from user-supplied JSON into change operations is an area where edit times could be drastically improved. Removing it may not be simple for many use cases, though, as various other bits of logic are applied around these ChangeOps and around saving the resulting Entity object (which we are coming to next).

When creating an Item, the ItemChangeOpDeserializer is used (per the entity type definition). This in turn uses a number of other ChangeOpDeserializers to pick apart the JSON supplied by the user into individual changes to an entity.

The level of granularity and processing / logic here can most easily be seen by looking at the LabelsChangeOpDeserializer.

    public function createEntityChangeOp( array $changeRequest ) {
        $changeOps = new ChangeOps();

        foreach ( $changeRequest['labels'] as $langCode => $serialization ) {
            $this->validator->validateTermSerialization( $serialization, $langCode );

            $language = $serialization['language'];
            $newLabel = ( array_key_exists( 'remove', $serialization ) ? '' :
                $this->stringNormalizer->trimToNFC( $serialization['value'] ) );

            if ( $newLabel === '' ) {
                $changeOps->add( $this->fingerprintChangeOpFactory->newRemoveLabelOp( $language ) );
            } else {
                $changeOps->add( $this->fingerprintChangeOpFactory->newSetLabelOp( $language, $newLabel ) );
            }
        }

        return $changeOps;
    }

All ChangeOps created from the user JSON have some validation that runs (label example), are then applied to a PHP object (label example), and have edit summaries built for them.

This same pattern is used in all existing Wikibase action API modules.
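To make the pattern easier to see in isolation, here is a minimal Python sketch of the same idea: deserialize JSON into small operations, then apply them to an in-memory object. It is not Wikibase code. The names `Item`, `set_label_op`, `remove_label_op`, `trim_to_nfc` and `labels_change_ops` are invented for this example, and the validation is a simplified stand-in for what the real validator does.

```python
import unicodedata


class Item:
    """A tiny stand-in for an entity object holding only labels."""

    def __init__(self):
        self.labels = {}


def set_label_op(language, value):
    def apply(item):
        item.labels[language] = value
    return apply


def remove_label_op(language):
    def apply(item):
        item.labels.pop(language, None)
    return apply


def trim_to_nfc(text):
    # Trim surrounding whitespace and normalize to Unicode NFC.
    return unicodedata.normalize("NFC", text.strip())


def labels_change_ops(change_request):
    """Turn the 'labels' part of a request into a list of operations."""
    ops = []
    for lang_code, serialization in change_request["labels"].items():
        # Simplified validation (an assumption for this sketch).
        if serialization.get("language") != lang_code:
            raise ValueError(f"inconsistent language for key {lang_code!r}")
        language = serialization["language"]
        if "remove" in serialization:
            new_label = ""
        else:
            new_label = trim_to_nfc(serialization["value"])
        if new_label == "":
            ops.append(remove_label_op(language))
        else:
            ops.append(set_label_op(language, new_label))
    return ops


item = Item()
item.labels["de"] = "alt"
request = {
    "labels": {
        "en": {"language": "en", "value": "  Cafe\u0301 "},
        "de": {"language": "de", "remove": ""},
    }
}
for op in labels_change_ops(request):
    op(item)
print(item.labels)
```

Even in this toy version, every label costs a validation step, a normalization step and an object allocation before anything is saved, which hints at why this stage adds up during bulk imports.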

Level 2: The Business

EditEntity in the business layer (sorry for the confusing duplicate names in this post) is responsible for taking Wikibase entities and storing them within MediaWiki's storage mechanisms. The interface is simple, taking an entity, summary, token, and some other small flags and options.

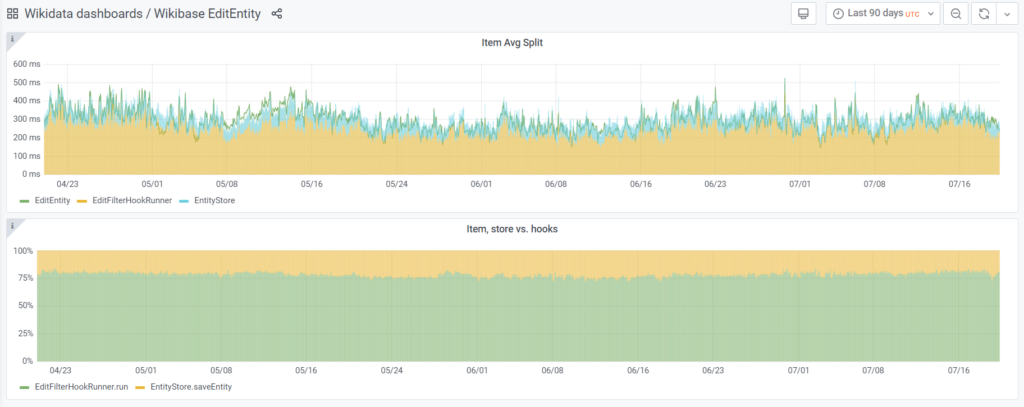

The “real” implementation of this interface is called MediaWikiEditEntity, which is also wrapped within a metric-gathering implementation. You can find the live data for this for wikidata.org on the Wikimedia Grafana install.

MediaWikiEditEntity::attemptSave is called and:

- The edit token is validated

- edit permissions are checked

- rate limits are also checked

- a check for previous revisions for this entity occurs

- edit conflict logic is skipped as we are making a new entity (no conflicts possible)

- edit filter hooks run

- the entity store gets called to store the entity (the next layer of “magic”)

- MediaWiki watchlist is updated if needed

- finally the result is returned to the API.

There is nothing amazing going on here, but for certain use cases (such as bulk imports) the list of areas for optimization continues to grow. Do such use cases care about edit permissions, rate limits, tokens, edit filters and watchlists?
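The ordering of these steps can be sketched as a simple chain of checks that must all pass before the store is touched. This is only an illustration under invented names (`SaveContext`, `attempt_save`), not the real MediaWikiEditEntity logic, and it omits the previous-revision and edit-conflict steps.

```python
from dataclasses import dataclass, field


@dataclass
class SaveContext:
    """Hypothetical flags standing in for the real checks."""
    token_ok: bool = True
    can_edit: bool = True
    rate_limited: bool = False
    filter_rejects: bool = False
    watch: bool = False
    log: list = field(default_factory=list)


def attempt_save(ctx, store):
    """Run each check in order; only call `store` if all of them pass."""
    checks = [
        ("token", lambda: ctx.token_ok),
        ("permissions", lambda: ctx.can_edit),
        ("rate limit", lambda: not ctx.rate_limited),
        ("edit filters", lambda: not ctx.filter_rejects),
    ]
    for name, passed in checks:
        ctx.log.append(name)
        if not passed():
            return f"failed: {name}"
    ctx.log.append("store")
    store()
    if ctx.watch:
        ctx.log.append("watchlist")
    return "ok"


stored = []
ctx = SaveContext(watch=True)
print(attempt_save(ctx, lambda: stored.append("Q1")), ctx.log)
```

A bulk import path that trusted its caller could, in principle, skip several entries in that list, which is the question the paragraph above raises.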

A quick segue into EditFilter Hooks

The EditFilter hooks relate to a MediaWiki hook called EditFilterMergedContent. Any number of extensions on a Wikibase could implement this hook, but the most common cases are likely things such as AbuseFilter, SpamBlacklist and ConfirmEdit.

The main Wikibase code implementation to look at is called MediaWikiEditFilterHookRunner.

Extensions that use this hook can ultimately do whatever they want at this stage. AbuseFilter, for example, converts the PHP entity object into a large text string for processing. It does this in its getTextForFilters method, using its own AbuseFilter-contentToString hook, which Wikibase registers and implements. You can see an example of the resulting string for a rather small Item in this test file. Any user-provided abuse filters then run across this large block of text (including regular expressions, which can be expensive).

The majority of time spent saving entities on wikidata.org as part of this business logic is spent in these edit filter hooks, currently at roughly 75% of save time, with less than 25% actually being spent storing the entities. You can see live data for wikidata.org on the Wikimedia Grafana install.
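A toy Python sketch can show why this step scales with both entity size and filter count. The flattening format below is invented for illustration and does not match the string Wikibase actually produces; `entity_to_text` and `run_filters` are hypothetical names.

```python
import re


def entity_to_text(entity):
    """Flatten an entity dict into one block of text (made-up format)."""
    parts = []
    for section in ("labels", "descriptions"):
        parts.extend(entity.get(section, {}).values())
    for values in entity.get("aliases", {}).values():
        parts.extend(values)
    for prop, values in entity.get("statements", {}).items():
        for value in values:
            parts.append(f"{prop}: {value}")
    return "\n".join(parts)


def run_filters(text, patterns):
    """Return the patterns that match; each one scans the whole text."""
    return [p.pattern for p in patterns if p.search(text)]


entity = {
    "labels": {"en": "Example item"},
    "descriptions": {"en": "an item used for testing"},
    "aliases": {"en": ["Sample", "Demo"]},
    "statements": {"P31": ["Q5"], "P856": ["https://spam.example/buy"]},
}
filters = [re.compile(r"https?://\S*spam"), re.compile(r"(?i)viagra")]
text = entity_to_text(entity)
print(run_filters(text, filters))
```

Each filter is a full pass over the flattened text, so a large Item checked against many filters multiplies quickly.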

Level 3: Wikibase persistence time

We are now in the Wikibase persistence layer, entering via the EntityStore interface. It’s pretty simple, allowing saving and deleting of entities, as well as id acquisition, watchlist changes and poking redirects.

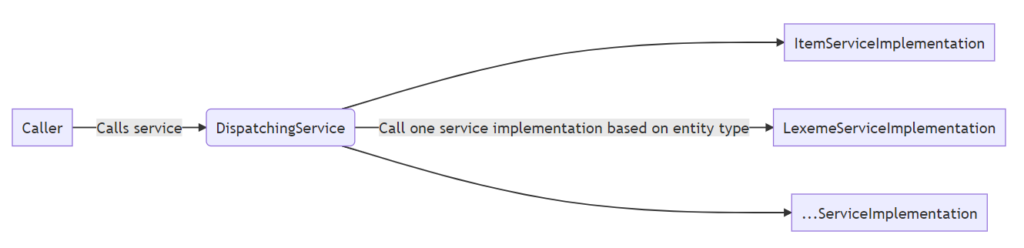

Each entity type can choose how to be stored, and the main EntityStore implementation dispatches to the chosen service by entity type. You can read a little more about this dispatching pattern in the architecture documentation.

In practice, all current entity types use the primary WikiPageEntityStore implementation (with the exception of the sub-entities form and sense for lexemes).
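The dispatching idea can be sketched in a few lines of Python. The class names here (`WikiPageStore`, `SubEntityStore`, `DispatchingStore`) are invented for this example, and the sub-entity behaviour shown, writing to the parent lexeme's page, is a simplification.

```python
class WikiPageStore:
    """Default store: one wiki page per entity."""

    def save(self, entity):
        return f"page:{entity['id']}"


class SubEntityStore:
    """Store for sub-entities that live inside their parent's page."""

    def save(self, entity):
        parent_id = entity["id"].split("-")[0]
        return f"page:{parent_id}"


class DispatchingStore:
    """Pick a store by entity type, falling back to a default."""

    def __init__(self, stores_by_type, default):
        self._stores = stores_by_type
        self._default = default

    def save(self, entity):
        store = self._stores.get(entity["type"], self._default)
        return store.save(entity)


sub_store = SubEntityStore()
store = DispatchingStore({"form": sub_store, "sense": sub_store}, WikiPageStore())
print(store.save({"type": "item", "id": "Q42"}))
print(store.save({"type": "form", "id": "L1-F1"}))
```

The caller only ever sees the one `save` method, which is what lets new entity types plug in their own storage without touching the layers above.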

The WikiPageEntityStore::saveEntity method quickly calls the saveEntityContent method which then:

- gets a page from MediaWiki to store the entity in

- updates the page using a PageUpdater

- and tells the updater to save the revision

- returning the persisted revision to the layer above

You might also spot a dispatch call for entityUpdated, which right now updates some Wikibase caches with the persisted entity, though most of this area of caching is being phased out.

Level 4: MediaWiki PageUpdater

The PageUpdater interface is fairly new in MediaWiki terms, having been introduced in the last couple of years. Some basic docs exist on mediawiki.org. We call PageUpdater::saveRevision, so let’s start looking there. We have:

- some more permission checks

- figuring out which parts of the page are being updated

- prepare some updates that will happen at some point

- Hook onMultiContentSave (mediawiki.org docs)

- create the page

- return up the call tree

There are some more details hidden in the create page step which we can see in the doCreate method. This is where we actually see things get written to the database:

- create a new revision

- insert the page record

- insert the revision record

- update the page record with the new revision

- Hook onRevisionFromEditComplete (mediawiki.org docs)

- insert a recent changes record (POST SEND)

- increment the user edit count (POST SEND)

- insert page creation log entry if enabled (enabled by default)

- do DerivedDataUpdater updates

- Hooks onPageSaveComplete (mediawiki.org docs)

You’ll notice a lot of hooks which I can’t really dive into in much detail here. You can find an overview of the MediaWiki hook system on mediawiki.org, and any number of extensions can implement any number of hooks to do any number of things.

You’ll also notice 2 steps above marked as POST SEND. These are part of the MediaWiki deferred update system, which mediawiki.org describes as follows:

It allows the execution of some features at the end of the request, when all the content has been sent to the browser, instead of queuing it in the job, which would otherwise be executed potentially some hours later. The goal of this alternate mechanism is mainly to speed up the main MediaWiki requests, and at the same time execute some features as soon as possible at the end of the request.

Deferred updates – mediawiki.org
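The mechanism in that description can be modelled with a small queue that is drained only after the response has gone out. This Python sketch is a toy model under invented names (`DeferredUpdates`, `create_page`, `serve`), not MediaWiki's actual implementation.

```python
class DeferredUpdates:
    """A toy post-send queue: callbacks run after the response is sent."""

    def __init__(self):
        self._queue = []

    def add_post_send(self, callback):
        self._queue.append(callback)

    def run_all(self):
        # Run callbacks in the order they were queued.
        while self._queue:
            self._queue.pop(0)()


def create_page(deferred, events):
    """Do the blocking work now and queue the rest for later."""
    events.append("insert page and revision records")
    deferred.add_post_send(lambda: events.append("insert recent changes record"))
    deferred.add_post_send(lambda: events.append("increment user edit count"))
    return {"success": 1}


def serve(events):
    deferred = DeferredUpdates()
    response = create_page(deferred, events)
    events.append("response sent")
    deferred.run_all()  # still part of the same request, but after the response
    return response


events = []
print(serve(events))
print(events)
```

The user-visible latency ends at "response sent", while the queued work still occupies the server for the remainder of the request.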

Level 5: Derived data updates

At the bottom of the sequence, after the initial commit to the database, are the derived data updates.

DerivedPageDataUpdater::doUpdates is the place to look now.

- ParserOutput of the page is generated (Phabricator task for removing this from Wikibase edits)

- Secondary data updates are scheduled (POST SEND)

- Category membership job is scheduled if enabled

- Hook onArticleEditUpdates (mediawiki.org docs)

- Site stats are updated (POST SEND)

- A search update is scheduled (POST SEND)

- WikiPage::onArticleCreate HTML caches are purged for the page

- WikiPage::onArticleCreate HTML caches are purged for pages that link to the page (JOB)

After this has all finished, the API will give you your response.

Over the last 2 levels various things started being deferred to after the web request was sent (post send) or sent to the job queue for later processing. Most of these are kind of boring.

Level 6: Secondary data updates

The secondary data updates include a few more juicy details, so let’s keep going! Scheduled as post send, all of this will happen after the user receives a response, but will still happen as part of the web request.

RefreshSecondaryDataUpdate in MediaWiki is the hub of these secondary data updates. Secondary updates come from the derived page data updater that we were just looking at. These include:

- links table updates

- updates for specific types of content

- Hook onRevisionDataUpdates (the new way, mediawiki.org docs)

Wikibase makes use of secondary data updates to keep some of its secondary storage indexes updated. When you create Items, the secondary updates come from the ItemHandler; these include updating the term-related tables and sitelinks. Properties use the PropertyHandler and update terms and property info.
