Your own Wikidata Query Service, with no limits

The Wikidata Query Service allows anyone to use SPARQL to query the continuously evolving data contained within the Wikidata project, currently standing at nearly 65 million data items (concepts) and over 7000 properties, which translates to roughly 8.4 billion triples.

You can find a great write-up introducing SPARQL, Wikidata, the query service and what it can do here. But this post will assume that you already know all of that.

EDIT 2022: You may also want to read https://addshore.com/2022/10/a-wikidata-query-service-jnl-file-for-public-use/

Guide

Here we will focus on creating a copy of the query service using data from one of the regular TTL data dumps and the query service docker image provided by the wikibase-docker git repo supported by WMDE.

Somewhere to work

Firstly we need a machine to hold the data and do the needed processing. This blog post will use an “n1-highmem-16” (16 vCPUs, 104 GB memory) virtual machine on the Google Cloud Platform with 3 local SSDs held together with RAID 0.

This should provide us with enough fast storage to store the raw TTL data, munged TTL files (where extra triples are added) as well as the journal (JNL) file that the blazegraph query service uses to store its data.

This entire guide will work on any instance size with more than ~4GB memory and adequate disk space of any speed. For me this setup seemed to be the fastest for the entire process to run.
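Before starting, it can be worth checking that the machine actually has the disk and memory you expect. The following is only a sketch: the function names are made up for this example, the thresholds in the usage comment are placeholders rather than measured requirements, and the memory check assumes Linux.

```shell
# Preflight sketch: check free disk space and total memory before starting.
# The function names and thresholds are illustrative, not from the wdqs tooling.

# Print free space (whole GB) on the filesystem holding directory $1.
free_disk_gb() {
  df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

# Print total memory (whole GB), read from /proc/meminfo (Linux only).
total_mem_gb() {
  awk '/^MemTotal:/ { print int($2 / 1024 / 1024) }' /proc/meminfo
}

# Succeed only if value $1 is at least minimum $2; otherwise warn on stderr.
# $3 is a label used in the warning.
require_at_least() {
  if [ "$1" -lt "$2" ]; then
    echo "only $1 GB of $3 available, wanted at least $2 GB" >&2
    return 1
  fi
}

# Example usage (the numbers are placeholders, adjust them to your dump size):
#   require_at_least "$(free_disk_gb /mnt/disks/ssddata)" 1000 "disk"
#   require_at_least "$(total_mem_gb)" 4 "memory"
```

The functions only read system information, so they are safe to run on any machine before committing to a multi-day load.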

If you are using the cloud console UI to create an instance then you can use the following options:

- Select Machine type n1-highmem-16 (16 vCPU, 104 GB memory)

- Boot disk: Google Drawfork Debian GNU/Linux 9

- Firewall (optional): Allow HTTP and HTTPS traffic if you want to access your query service copy externally

- Add disk: Local SSD scratch disk, NVMe (select 3 of them)

If you are creating the instance via the command line your command will look something like the one below. All other defaults should be fine as long as the user you are using can create instances, and you are in the correct project and region.

gcloud compute instances create wikidata-query-1 --machine-type=n1-highmem-16 --local-ssd=interface=NVME --local-ssd=interface=NVME --local-ssd=interface=NVME

Initial setup

Setting up my SSDs in RAID

As explained above, I will be using some SSDs that need to be set up in a RAID, so I’ll start with that, following the docs provided by GCE. If you have no need for RAID then skip this step.

sudo apt-get update

sudo apt-get install mdadm --no-install-recommends

sudo mdadm --create /dev/md0 --level=0 --raid-devices=3 /dev/nvme0n1 /dev/nvme0n2 /dev/nvme0n3

sudo mkfs.ext4 -F /dev/md0

sudo mkdir -p /mnt/disks/ssddata

sudo mount /dev/md0 /mnt/disks/ssddata

sudo chmod a+w /mnt/disks/ssddata

Packages & User

Docker will be needed to run the wdqs docker image, so follow the instructions and install that.

This process will require some long-running scripts, so it is best to install tmux so that we don’t lose track of them.

sudo apt-get install tmux

Now let’s create a user that is in the docker group to run our remaining commands as. We will also use the home directory of this user for file storage.

sudo adduser sparql

sudo usermod -aG docker sparql

sudo su sparql

Then start a tmux session with the following command:

tmux

And download a copy of the wdqs docker image from Docker Hub.

docker pull wikibase/wdqs:0.3.6

Here we use version 0.3.6, but this guide should work for future versions just the same (maybe not for 0.4.x+ when that comes out).
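Since the same image tag appears in several commands, one option is to keep it in a single variable and warn when it falls outside the 0.3.x series. This is a small sketch only; WDQS_TAG and is_tested_series are names invented for this example and are not part of the wdqs tooling.

```shell
# Sketch: keep the image tag in one place and flag untested series.
# WDQS_TAG and is_tested_series are hypothetical names for this example.
WDQS_TAG="0.3.6"

# Succeed if the tag belongs to the 0.3.x series this guide was written against.
is_tested_series() {
  case "$1" in
    0.3.*) return 0 ;;
    *)     return 1 ;;
  esac
}

if is_tested_series "$WDQS_TAG"; then
  echo "wikibase/wdqs:$WDQS_TAG is in the 0.3.x series"
else
  echo "wikibase/wdqs:$WDQS_TAG is untested with this guide" >&2
fi
```

Later commands could then refer to "wikibase/wdqs:$WDQS_TAG" instead of repeating the version.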

Download the TTL dump

The main location to find the Wikidata dumps is on https://dumps.wikimedia.org, partially buried in the wikidatawiki/entities/ directory. Unfortunately downloads from here have speed restrictions, so you are better off using one of the mirrors.

I found that the your.org mirror was the fastest from the us-east4-c GCE zone. To download, simply type the following and wait. (At the end of 2018 this was a 47GB download.)

Firstly make sure we are in the directory which is backed by our local SSD storage which was mounted above.

cd /mnt/disks/ssddata

And download the latest TTL dump file, which can take around 30 minutes to an hour.

wget http://dumps.wikimedia.your.org/wikidatawiki/entities/latest-all.ttl.gz

Munge the data

UPDATE 2020: To munge quicker using hadoop read this blog post.

Munging the data involves running a script which is packaged with the query service over the TTL dump that we have just downloaded. We will use Docker and the wdqs docker image to do this, starting a new container running bash.

docker run --entrypoint=/bin/bash -it --rm -v /mnt/disks/ssddata:/stuff wikibase/wdqs:0.3.6

And then run the munge script from the bash shell inside the container. This will take around 20 hours and create many files.

./munge.sh -c 50000 -f /stuff/latest-all.ttl.gz -d /stuff/mungeOut

The -c option was introduced for use in this blog post and allows the chunk size to be selected. If the chunk size is too big you may run into import issues. 50000 is half of the default.
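To get a feel for what the chunk size means for the output directory, here is a rough calculation of how many munged files to expect. It assumes that -c counts entities per output file, which is worth verifying against the munge script you are running, and it uses the "nearly 65 million items" figure from the introduction as the entity count. chunk_count is a helper invented for this example.

```shell
# Sketch: estimate how many munged files a given chunk size produces.
# Assumption: -c is the number of entities written to each output file.

# Print ceil($1 / $2) using integer arithmetic.
chunk_count() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

chunk_count 65000000 50000    # prints 1300
chunk_count 65000000 100000   # prints 650
```

Under that assumption, halving the chunk size doubles the number of files while keeping each individual import smaller.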

Once the munge has completed you can leave the container that we started and return to the virtual machine.

exit

Run the service

In order to populate the service we first need to run it using the below command. This mounts the directory containing the munged data as well as the directory for storing the service JNL file in. This will also expose the service on port 9999 which will be writable, so if you don’t want other people to access this check your firewall rules. Don’t worry if you don’t have a dockerData directory, as it will be created when you run this command.

docker run --entrypoint=/runBlazegraph.sh -d \
  -v /mnt/disks/ssddata/dockerData:/wdqs/data \
  -v /mnt/disks/ssddata:/mnt/disks/ssddata \
  -e HEAP_SIZE="128g" \
  -p 9999:9999 wikibase/wdqs:0.3.6

You can verify that the service is running with curl.

curl localhost:9999/bigdata/namespace/wdq/sparql

Load the data

Using the ID of the container that is now running, which you can find out by running “docker ps”, start a new bash shell alongside the service.

docker exec -it friendly_joliot bash

Now that we are in the container running our query service we can load the data using the loadData script and the previously munged files.

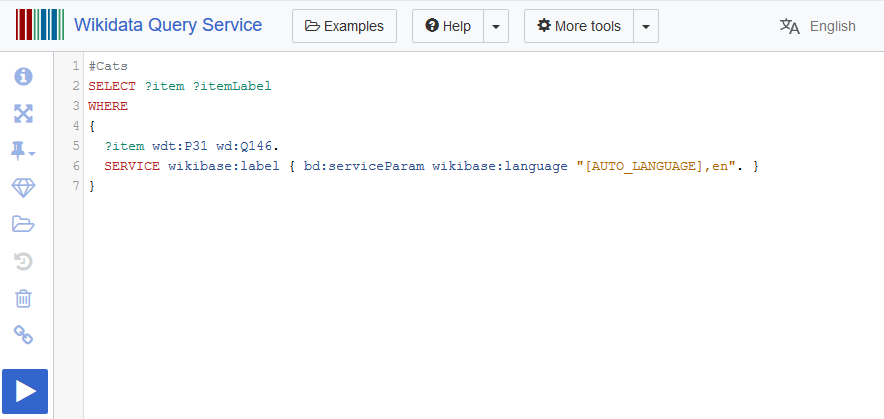

/wdqs/loadData.sh -n wdq -d /mnt/disks/ssddata/mungeOut

Once the data appears to be loaded, you can try out a shorter version of the cats query from the service examples.

curl 'localhost:9999/bigdata/namespace/wdq/sparql?query=%23Cats%0ASELECT%20%3Fitem%20%3FitemLabel%20%0AWHERE%20%0A%7B%0A%20%20%3Fitem%20wdt%3AP31%20wd%3AQ146.%0A%20%20SERVICE%20wikibase%3Alabel%20%7B%20bd%3AserviceParam%20wikibase%3Alanguage%20%22en%2Cen%22.%20%7D%0A%7D'

Timings

Loading data from a totally fresh TTL dump into a blank query service is not a quick task currently. In production (wikidata.org) it takes roughly a week, and I had a similar experience while trying to streamline the process as best I could on GCE.

For a dump taken at the end of 2018 the timings for each stage were as follows:

- Data dump download: 2 hours

- Data Munge: 20 hours

- Data load: 4.5 days

- Total time: ~5.5 days

Various parts of the process lead me to believe that this could be done faster, because CPU usage was pretty low throughout and not all memory was utilized. The loading of the data into Blazegraph was by far the slowest step, but digging into this would require someone who is more familiar with the Blazegraph internals.
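To put the stage timings in perspective, here is a quick back-of-the-envelope calculation of the total time and the average load rate. It assumes the roughly 8.4 billion triple figure from the introduction applies to the loaded dump, which may overstate a dump taken at the end of 2018, so treat the rate as an order of magnitude only.

```shell
# Sketch: sum the stage timings and estimate the average load throughput.
# Assumption: the triple count is the ~8.4 billion quoted in the introduction.

download_h=2
munge_h=20
load_h=108            # 4.5 days * 24 hours

total_h=$(( download_h + munge_h + load_h ))
echo "total: ${total_h} hours"                                       # 130 hours

# Days to one decimal place; awk is used for the floating point division.
awk -v h="$total_h" 'BEGIN { printf "total: %.1f days\n", h / 24 }'  # 5.4 days

# Average triples per second during the load stage.
triples=8400000000
load_s=$(( load_h * 3600 ))
echo "load rate: $(( triples / load_s )) triples/s"
```

Under these assumptions the load stage averages a little over twenty thousand triples per second, which fits the impression that the hardware was not being pushed very hard.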

Blog post series

This blog post, and future blog posts on the topic of running a Wikidata query service on Google cloud, are supported by Google and written by WMDE.

Parts 2 and 3 will speed up this process and use the docker images and a pre-generated Blazegraph journal file to deploy a fully loaded Wikidata query service in a matter of minutes.

Your loading wasn’t maximizing your CPU or Memory because you forgot to add the following into the loadData.sh file and configure properly:

com.bigdata.rdf.store.DataLoader.flush=false

com.bigdata.rdf.store.DataLoader.bufferCapacity=100000

com.bigdata.rdf.store.DataLoader.queueCapacity=10

Read more here: https://wiki.blazegraph.com/wiki/index.php/Bulk_Data_Load#Configuring
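One possible way to apply settings like these is to append them to the properties file that the loader is started with. This is a sketch only: set_property and PROPS_FILE are names invented for this example, and which file the wdqs image actually reads is an assumption you would need to verify for your setup. By default the sketch writes to a temporary file so it can be tried safely.

```shell
# Sketch: append DataLoader settings to a properties file, skipping keys that
# are already present. set_property and PROPS_FILE are hypothetical names.

# Append "$2=$3" to file $1 unless key $2 is already set there.
set_property() {
  if ! awk -F= -v k="$2" '$1 == k { found = 1 } END { exit !found }' "$1" 2>/dev/null; then
    printf '%s=%s\n' "$2" "$3" >> "$1"
  fi
}

# Defaults to a throwaway temp file; point PROPS_FILE at a real file to use it.
props="${PROPS_FILE:-$(mktemp)}"

set_property "$props" com.bigdata.rdf.store.DataLoader.flush false
set_property "$props" com.bigdata.rdf.store.DataLoader.bufferCapacity 100000
set_property "$props" com.bigdata.rdf.store.DataLoader.queueCapacity 10

cat "$props"
```

Because existing keys are left untouched, running the sketch twice does not duplicate entries.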

Also you can even tweak the database instance:

/opt/RWStore.properties

See more options here: https://wiki.blazegraph.com/wiki/index.php/Configuring_Blazegraph

and here: https://wiki.blazegraph.com/wiki/index.php/PerformanceOptimization#Batch_is_beautiful

Thanks!

I knew that I was missing things but really needed to get this long overdue post up.

I look forward to trying it out with some alternate settings!

I’ll be sure to follow up here when I do.

Did you get to try out some of the DataLoader options, or other options mentioned in the Blazegraph wiki?

Not yet! I still haven’t written the follow-up to this post. But I hope that I will soon; it will include using hive / bigtable to do the munge step in a matter of minutes, and also looking into the options to make the load faster.

I would be very interested in the follow-up. Do you have any estimation on its arrival?

Noob question: How do I implement the flush, buffer, and queue configs in loadData.sh? I am using wdqs 0.3.10 image that comes with the Wikibase docker-compose setup.

There are a few places you COULD add configs for Blazegraph, depending on the config needed (logging, memory, Blazegraph flush, buffering, etc.). Some of this is already detailed here: https://github.com/blazegraph/database/wiki/Configuring_Blazegraph as well as in the many replies that Bryan Thompson (primary Blazegraph dev) has posted in the forum here: https://sourceforge.net/p/bigdata/discussion/676946/

And since Blazegraph is Java, you can set any -D system properties and Blazegraph will use them. You can also set the Blazegraph internal properties (com.bigdata…) directly after the blazegraph.jar as options or within its various .properties files. https://github.com/blazegraph/database/wiki/Configuring_Blazegraph

For instance:

java -server -Xmx4g -Djava.util.logging.config.file=logging.properties -Dcom.bigdata.rdf.sparql.ast.QueryHints.analyticMaxMemoryPerQuery=8G -jar blazegraph.jar

Is there a place where we could download the Blazegraph instance with the dataset loaded? Unfortunately we need a local instance running but we don’t have enough computing power to perform the loading process.

Not yet!

There’s a lot of good information for Bulk Data Loading within Blazegraph Wiki if you just search it… Like I did here: https://github.com/blazegraph/database/search?q=bulk+data&type=Wikis The first to look at is your mode of inference, Incremental Truth or Database at once closure. Incremental truth maintenance is fast for small updates. For large updates it can be very expensive. Therefore bulk load should ALWAYS use the database-at-once closure method. https://github.com/blazegraph/database/wiki/InferenceAndTruthMaintenance#configuring-inference

Thanks for your post. It is fascinating to see this work end to end. However, it would be great to have a “baby step” with a much smaller dataset to get started, say a dataset that can be unloaded, munged and loaded in under 5 minutes :)

[…] in 2019 I wrote a blog post called Your own Wikidata Query Service, with no limits which documented loading a Wikidata TTL dump into your own Blazegraph instance running within […]