Back in 2019 I wrote a blog post called “Your own Wikidata Query Service, with no limits”, which documented loading a Wikidata TTL dump into your own Blazegraph instance running within Google Cloud, a process that took nearly two weeks.

I ended that post speculating that part 2 might be using a “pre-generated Blazegraph journal file to deploy a fully loaded Wikidata query service in a matter of minutes”. This post should take us a step closer to that eventuality.

Wikidata Production

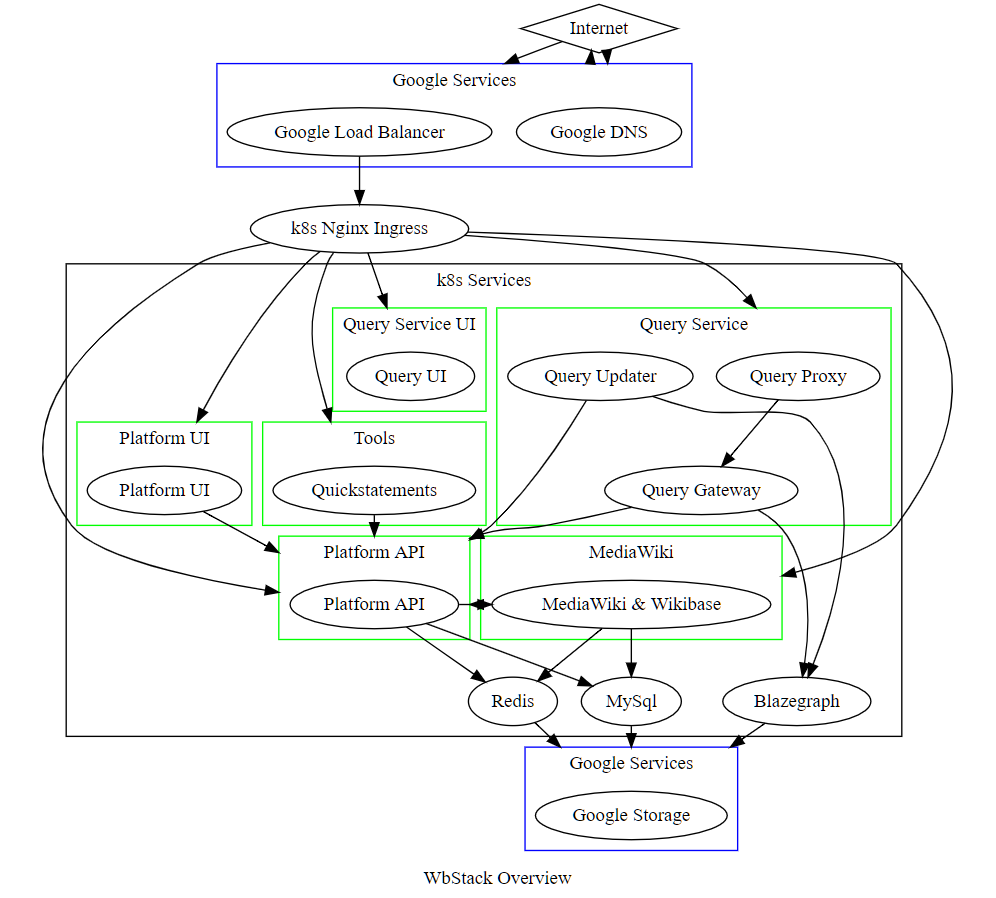

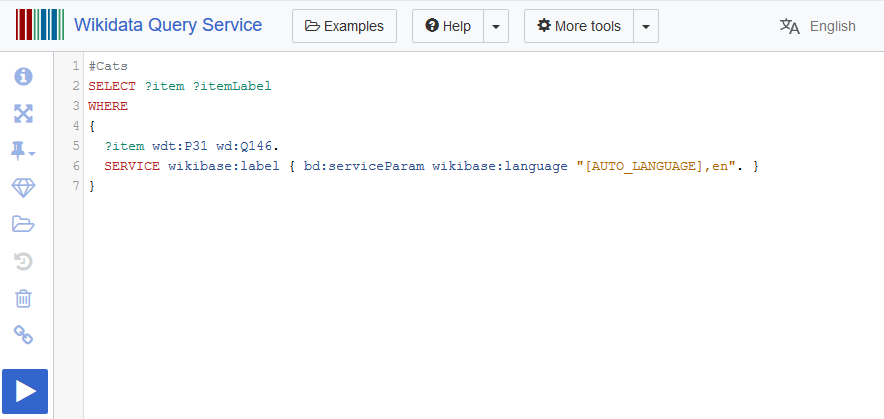

There are many production Wikidata query service instances, all kept up to date with Wikidata and all powered by open source code that anyone can use, built on Blazegraph.

Per the wikitech documentation, there are currently at least 17 Wikidata query service backends:

- public cluster, eqiad: wdqs1004, wdqs1005, wdqs1006, wdqs1007, wdqs1012, wdqs1013

- public cluster, codfw: wdqs2001, wdqs2002, wdqs2003, wdqs2004, wdqs2007

- internal cluster, eqiad: wdqs1003, wdqs1008, wdqs1011

- internal cluster, codfw: wdqs2005, wdqs2006, wdqs2008
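As a quick sanity check of the "at least 17" figure, the list above can be tallied in a few lines of Python. This is only a sketch: the hostnames are copied from the list, while the dictionary layout and variable names are my own illustration and not part of any Wikimedia tooling.

```python
# Backend hostnames copied from the list above. The dict layout and the
# variable names are illustrative assumptions, not Wikimedia tooling.
backends = {
    ("public", "eqiad"): ["wdqs1004", "wdqs1005", "wdqs1006", "wdqs1007", "wdqs1012", "wdqs1013"],
    ("public", "codfw"): ["wdqs2001", "wdqs2002", "wdqs2003", "wdqs2004", "wdqs2007"],
    ("internal", "eqiad"): ["wdqs1003", "wdqs1008", "wdqs1011"],
    ("internal", "codfw"): ["wdqs2005", "wdqs2006", "wdqs2008"],
}

# Count every backend, then only those in the public clusters.
total = sum(len(hosts) for hosts in backends.values())
public_total = sum(
    len(hosts) for (cluster, _datacenter), hosts in backends.items() if cluster == "public"
)

for (cluster, datacenter), hosts in backends.items():
    print(f"{cluster:8} {datacenter}: {len(hosts)} backends")
print(f"total: {total}, of which public: {public_total}")
```

The tally comes to 17 backends, 11 of which sit in the public clusters.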

These servers all have hardware specs that look something like dual Intel(R) Xeon(R) E5-2620 v3 CPUs, 1.6TB of raw RAIDed SSD space, and 128GB of RAM.

When you run a query, it may end up at any one of the backends powering the public clusters.

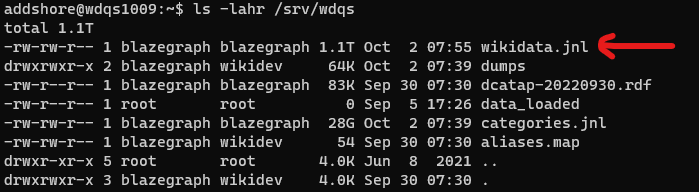

Each of these servers also has an up-to-date JNL file full of Wikidata data, which anyone wanting to set up their own Blazegraph instance with Wikidata data could use. The file is currently 1.1TB.

So let’s try and get that out of the cluster for folks to use, rather than having people rebuild their own JNL files.